Reasoning Revealed: DeepSeek-R1, a Transparent Challenger to OpenAI o1


Author: Robbin Couture | Posted: 25-02-01 12:50

Llama 3.1 405B was trained on 30,840,000 GPU hours, 11x the amount used by DeepSeek-V3, for a model that benchmarks slightly worse. Mistral 7B is a 7.3B-parameter open-source (Apache 2.0 license) language model that outperforms much larger models like Llama 2 13B and matches many benchmarks of Llama 1 34B. Its key innovations include grouped-query attention and sliding window attention for efficient processing of long sequences. As we have seen throughout the blog, these have been exciting times, with the launch of these five powerful language models.

All models are evaluated in a configuration that limits the output length to 8K. Benchmarks containing fewer than 1,000 samples are tested multiple times using varying temperature settings to derive robust final results.

Some models struggled to follow through or provided incomplete code (e.g., StarCoder, CodeLlama). StarCoder (7b and 15b): the 7b model provided a minimal and incomplete Rust code snippet with only a placeholder. 8b provided a more complex implementation of a Trie data structure. Note that this is only one example of a more advanced Rust function that uses the rayon crate for parallel execution.

• We will continuously iterate on the quantity and quality of our training data, and explore the incorporation of additional training signal sources, aiming to drive data scaling across a more comprehensive range of dimensions.
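A minimal sketch of the two attention ideas credited to Mistral 7B above, written in Rust to match the code discussed later in this post. `sliding_window_mask` builds the boolean mask for causal sliding-window attention (each position sees itself plus the previous `window - 1` positions), and `kv_head_for_query_head` shows how grouped-query attention lets several query heads share one key/value head. Both function names and all sizes used below are hypothetical illustrations, not Mistral's actual configuration or implementation.

```rust
/// Causal sliding-window attention mask: `mask[i][j]` is true when query
/// position `i` may attend to key position `j`, i.e. `j <= i` and
/// `i - j < window` (the token itself plus the previous `window - 1` tokens).
fn sliding_window_mask(seq_len: usize, window: usize) -> Vec<Vec<bool>> {
    assert!(window > 0, "window must cover at least the current token");
    (0..seq_len)
        .map(|i| {
            (0..seq_len)
                // Check `j <= i` first so `i - j` never underflows.
                .map(|j| j <= i && i - j < window)
                .collect::<Vec<bool>>()
        })
        .collect()
}

/// Grouped-query attention: `n_q_heads` query heads share `n_kv_heads`
/// key/value heads, so each run of `n_q_heads / n_kv_heads` consecutive
/// query heads reads the same K/V head.
fn kv_head_for_query_head(q_head: usize, n_q_heads: usize, n_kv_heads: usize) -> usize {
    assert!(n_kv_heads > 0 && n_q_heads % n_kv_heads == 0, "heads must split into equal groups");
    let group_size = n_q_heads / n_kv_heads;
    q_head / group_size
}

fn main() {
    // Hypothetical sizes: 5 tokens, window of 3.
    for row in sliding_window_mask(5, 3) {
        let line: String = row.iter().map(|&m| if m { '1' } else { '.' }).collect();
        println!("{line}");
    }
    // Hypothetical GQA setup: 8 query heads sharing 2 KV heads.
    let groups: Vec<usize> = (0..8).map(|h| kv_head_for_query_head(h, 8, 2)).collect();
    println!("{groups:?}"); // [0, 0, 0, 0, 1, 1, 1, 1]
}
```

Because each row has at most `window` true entries, attention cost per token stays bounded as the sequence grows, which is the efficiency argument for long sequences.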

In this article, we'll explore how to use a cutting-edge LLM hosted on your own machine and connect it to VSCode for a powerful, free, self-hosted Copilot or Cursor experience without sharing any information with third-party services. It then checks whether the end of the word was found and returns this information. Moreover, self-hosted solutions ensure data privacy and security, as sensitive data stays within the confines of your infrastructure. If I were building an AI app with code execution capabilities, such as an AI tutor or AI data analyst, E2B's Code Interpreter would be my go-to tool. Imagine having a Copilot or Cursor alternative that is both free and private, seamlessly integrating with your development environment to offer real-time code suggestions, completions, and reviews.

GameNGen is "the first game engine powered entirely by a neural model that enables real-time interaction with a complex environment over long trajectories at high quality," Google writes in a research paper outlining the system.
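A self-hosted setup like the one described above comes down to the editor sending prompts to a model server running on your own machine. The post does not name a server, so this sketch assumes Ollama, whose `POST /api/generate` endpoint on default port 11434 accepts a JSON body with `model`, `prompt`, and `stream` fields. The model name `deepseek-coder` is a hypothetical placeholder for whatever model you have pulled locally. Only the Rust standard library is used (a raw HTTP/1.1 request over `TcpStream`), which is enough to show the round trip; a real editor extension would use a proper HTTP client and JSON library.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

/// Escapes a string for use inside a JSON string literal.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// ASSUMPTION: Ollama-style `/api/generate` body; `stream: false` asks for one JSON reply.
fn build_generate_body(model: &str, prompt: &str) -> String {
    format!(
        r#"{{"model":"{}","prompt":"{}","stream":false}}"#,
        json_escape(model),
        json_escape(prompt)
    )
}

/// Builds a raw HTTP/1.1 POST request; Content-Length is the body size in bytes.
fn build_http_request(host: &str, port: u16, body: &str) -> String {
    format!(
        "POST /api/generate HTTP/1.1\r\nHost: {host}:{port}\r\nContent-Type: application/json\r\nContent-Length: {}\r\nConnection: close\r\n\r\n{body}",
        body.len()
    )
}

/// Sends the request and returns the raw response; fails if nothing is listening.
fn send(host: &str, port: u16, request: &str) -> std::io::Result<String> {
    let mut stream = TcpStream::connect((host, port))?;
    stream.write_all(request.as_bytes())?;
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(response)
}

fn main() {
    // Hypothetical model name; substitute your own locally pulled model.
    let body = build_generate_body("deepseek-coder", "Write a Rust function that reverses a string.");
    let request = build_http_request("localhost", 11434, &body);
    match send("localhost", 11434, &request) {
        Ok(response) => println!("{response}"),
        Err(e) => eprintln!("no local model server reachable: {e}"),
    }
}
```

Nothing in the request leaves `localhost`, which is the privacy property the paragraph emphasizes.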

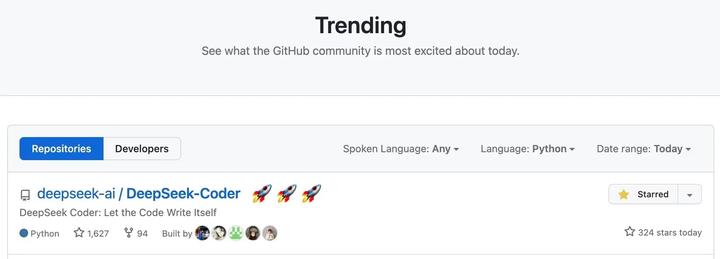

The game logic can be further extended to include additional features, such as special dice or different scoring rules.

What can DeepSeek do? DeepSeek Coder V2 outperformed OpenAI's GPT-4-Turbo-1106 and GPT-4-061, Google's Gemini 1.5 Pro, and Anthropic's Claude-3-Opus models at coding.

300 million images: The Sapiens models are pretrained on Humans-300M, a Facebook-assembled dataset of "300 million diverse human images."

StarCoder is a grouped-query attention model that has been trained on over 600 programming languages from BigCode's The Stack v2 dataset.

2. SQL Query Generation: It converts the generated steps into SQL queries.

CodeLlama: generated an incomplete function that aimed to process a list of numbers, filtering out negatives and squaring the results.
Collecting into a new vector: The squared variable is created by collecting the results of the map function into a new vector.
Pattern matching: The filtered variable is created by using pattern matching to filter out any negative numbers from the input vector.

Stable Code: presented a function that divided a vector of integers into batches using the Rayon crate for parallel processing.
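The CodeLlama and Stable Code descriptions above can be sketched as follows. This is a hypothetical reconstruction, not either model's actual output. `filter_and_square` drops negatives with a range pattern, collects the squares with `map`, and returns both vectors as a tuple; squares are widened to `i64` so large inputs cannot overflow. `process_in_batches` splits the data into batches and handles each batch on its own thread. Rayon's `par_chunks_mut` would be the usual tool, but this sketch swaps in the standard library's `std::thread::scope` so it compiles without external crates, and the per-batch work (doubling each value) is an arbitrary placeholder.

```rust
use std::thread;

/// Drops negatives via pattern matching, squares what is left,
/// and returns both vectors as a tuple.
fn filter_and_square(input: &[i32]) -> (Vec<i32>, Vec<i64>) {
    // Pattern matching: the range pattern matches every negative i32.
    let filtered: Vec<i32> = input
        .iter()
        .filter_map(|&x| match x {
            i32::MIN..=-1 => None, // negative: filtered out
            n => Some(n),          // zero or positive: kept
        })
        .collect();
    // Collecting into a new vector: map each value to its square (as i64).
    let squared: Vec<i64> = filtered.iter().map(|&x| i64::from(x) * i64::from(x)).collect();
    (filtered, squared)
}

/// Splits `data` into batches of `batch_size` and processes each batch on a
/// scoped thread. A `&mut Vec<i32>` can be passed directly (it derefs to a slice).
fn process_in_batches(data: &mut [i32], batch_size: usize) {
    assert!(batch_size > 0, "batch size must be positive");
    thread::scope(|s| {
        for batch in data.chunks_mut(batch_size) {
            s.spawn(move || {
                // Placeholder per-batch work: double each value, saturating at the bounds.
                for x in batch.iter_mut() {
                    *x = x.saturating_mul(2);
                }
            });
        }
    }); // all scoped threads are joined here
}

fn main() {
    let (filtered, squared) = filter_and_square(&[-3, 1, -2, 4, 0]);
    println!("{filtered:?} {squared:?}"); // [1, 4, 0] [1, 16, 0]

    let mut data = vec![1, 2, 3, 4, 5];
    process_in_batches(&mut data, 2);
    println!("{data:?}"); // [2, 4, 6, 8, 10]
}
```

`chunks_mut` hands each thread a disjoint mutable slice, so no locking is needed; the last batch is simply shorter when the length is not a multiple of `batch_size`.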

This function takes a mutable reference to a vector of integers and an integer specifying the batch size.

1. Error Handling: The factorial calculation may fail if the input string can't be parsed into an integer. It uses a closure to multiply the result by each integer from 1 up to n. The unwrap() method is used to extract the value from the Result type returned by the function.

Returning a tuple: The function returns a tuple of the two vectors as its result.

If there is an attempt to insert a duplicate word, the function returns without inserting anything. Each node also keeps track of whether it is the end of a word. The insert method iterates over each character in the given word and inserts it into the Trie if it is not already present. …'t check for the end of a word.

It's quite simple: after a very long conversation with a system, ask the system to write a message to the next version of itself encoding what it thinks it should know to best serve the human operating it.

End of Model input. Something seems pretty off with this model…
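The paragraph above describes two generated snippets without showing them: a Trie whose nodes track an end-of-word flag and whose insert skips duplicates, and a factorial function that parses its input. Below is a minimal sketch of both, assuming a `HashMap`-backed Trie and a `u64` factorial; the names `Trie`, `TrieNode`, `insert`, `search`, and `factorial_from_str` are hypothetical, not the models' output. Unlike the described version, the factorial returns an error on overflow, and `unwrap()` appears only where the input is known to be valid.

```rust
use std::collections::HashMap;

/// One Trie node: children keyed by character, plus an end-of-word flag.
#[derive(Default)]
struct TrieNode {
    children: HashMap<char, TrieNode>,
    is_end_of_word: bool,
}

#[derive(Default)]
struct Trie {
    root: TrieNode,
}

impl Trie {
    fn new() -> Self {
        Self::default()
    }

    /// Walks `word` character by character, creating only missing nodes.
    /// Returns false, changing nothing, if the word is already stored.
    fn insert(&mut self, word: &str) -> bool {
        let mut node = &mut self.root;
        for c in word.chars() {
            node = node.children.entry(c).or_default();
        }
        if node.is_end_of_word {
            return false; // duplicate word: nothing to insert
        }
        node.is_end_of_word = true;
        true
    }

    /// True only if the whole path exists AND its last node ends a word.
    fn search(&self, word: &str) -> bool {
        let mut node = &self.root;
        for c in word.chars() {
            match node.children.get(&c) {
                Some(next) => node = next,
                None => return false,
            }
        }
        node.is_end_of_word // without this check, any prefix would match
    }
}

/// Parses `input` and returns its factorial; parse failures and u64
/// overflow become `Err` values instead of panics.
fn factorial_from_str(input: &str) -> Result<u64, String> {
    let n = input
        .trim()
        .parse::<u64>()
        .map_err(|e| format!("cannot parse {input:?} as an integer: {e}"))?;
    // A closure multiplies the running product by each integer from 1 up to n.
    (1..=n)
        .try_fold(1u64, |acc, k| acc.checked_mul(k))
        .ok_or_else(|| format!("{n}! overflows u64"))
}

fn main() {
    let mut trie = Trie::new();
    trie.insert("rust");
    trie.insert("rustacean");
    println!("{}", trie.insert("rust")); // false: duplicate
    println!("{}", trie.search("rust")); // true
    println!("{}", trie.search("rusta")); // false: a prefix, not a stored word

    // unwrap() is safe here only because "5" is known to parse.
    println!("{}", factorial_from_str("5").unwrap()); // 120
    println!("{}", factorial_from_str("five").is_err()); // true
}
```

The final `is_end_of_word` check in `search` is what separates stored words from mere prefixes; a search that skips it would report "rusta" as present.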
