If You'd Like to Achieve Success in DeepSeek, Here Are 5 Invalu…

Author: Shanice | Posted: 25-02-01 09:48

For this fun test, DeepSeek was actually comparable to its best-known US competitor. "Time will tell if the DeepSeek threat is real - the race is on as to what technology works and how the big Western players will respond and evolve," Michael Block, market strategist at Third Seven Capital, told CNN. If a Chinese startup can build an AI model that works just as well as OpenAI's latest and greatest, and do so in under two months and for less than $6 million, then what use is Sam Altman anymore? Can DeepSeek Coder be used for commercial purposes? The DeepSeek-R1 series supports commercial use and allows any modifications and derivative works, including, but not limited to, distillation for training other LLMs. From the outset, it was free for commercial use and fully open-source. DeepSeek became the most downloaded free app in the US just a week after it was launched. Later, on November 29, 2023, DeepSeek launched DeepSeek LLM, described as the "next frontier of open-source LLMs," scaled up to 67B parameters.

That decision was certainly fruitful. The open-source family of models, including DeepSeek Coder, DeepSeek LLM, DeepSeekMoE, DeepSeek-Coder-V1.5, DeepSeekMath, DeepSeek-VL, DeepSeek-V2, DeepSeek-Coder-V2, and DeepSeek-Prover-V1.5, can now be used for many purposes and is democratizing the use of generative models. Beyond being able to explain its reasoning, DeepSeek's R1 model builds on this open-source family of models, which can be accessed on GitHub. OpenAI, DeepSeek's closest U.S. That is why the world's most powerful models are made either by massive corporate behemoths like Facebook and Google, or by startups that have raised unusually large amounts of capital (OpenAI, Anthropic, xAI). Why is DeepSeek so important? "I wouldn't be surprised to see the DOD embrace open-source American reproductions of DeepSeek and Qwen," Gupta said. See the 5 features at the core of this process. "We attribute the state-of-the-art performance of our models to: (i) large-scale pretraining on a large curated dataset, which is specifically tailored to understanding humans, (ii) scaled high-resolution and high-capacity vision transformer backbones, and (iii) high-quality annotations on augmented studio and synthetic data," Facebook writes. In March 2024, DeepSeek tried its hand at vision models and launched DeepSeek-VL for high-quality vision-language understanding. In February 2024, DeepSeek launched a specialized model, DeepSeekMath, with 7B parameters.

Ritwik Gupta, who with several colleagues wrote one of the seminal papers on building smaller AI models that produce big results, cautioned that much of the hype around DeepSeek reflects a misreading of exactly what it is, which he described as "still a big model," with 671 billion parameters. "We present DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters with 37B activated for each token." Capabilities: Mixtral is an advanced AI model that uses a Mixture-of-Experts (MoE) architecture. DeepSeek's innovative approaches to attention mechanisms and the Mixture-of-Experts (MoE) technique have led to impressive efficiency gains. He told Defense One: "DeepSeek is an excellent AI advancement and a perfect example of Test Time Scaling," a technique that spends more computing power at inference time, when the model is processing input to produce a new result. "DeepSeek challenges the idea that larger scale models are always more performative, which has important implications given the security and privacy vulnerabilities that come with building AI models at scale," Khlaaf said.
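The "671B total parameters with 37B activated for each token" figure comes from Mixture-of-Experts routing. A gating function scores every expert, and only the highest-scoring few are actually run for a given token. Below is a minimal sketch of a generic top-k softmax router in plain Python. It is an assumption for illustration and not DeepSeek-V3's actual routing scheme. The function names `moe_forward` and `active_fraction`, the dot-product gate, and the toy experts are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Expert],
    k: int,
) -> Tuple[Vector, List[int]]:
    """Hypothetical top-k MoE layer: route one token to k experts and mix their outputs."""
    # One gate score per expert: dot product of the token with that expert's gate vector.
    logits = [sum(w * x for w, x in zip(gw, token)) for gw in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated,
    # which is why only a fraction of the total parameters is "active" per token.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate scores over the selected experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        y = experts[i](token)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of parameters used per token, e.g. 37B of 671B."""
    return active_params / total_params
```

With three toy experts that scale their input by 1, 2 and 3, and `k=2`, only two experts run for each token. For the DeepSeek-V3 figures, `active_fraction(37e9, 671e9)` is roughly 0.055, meaning about 5.5% of the parameters are used per token.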

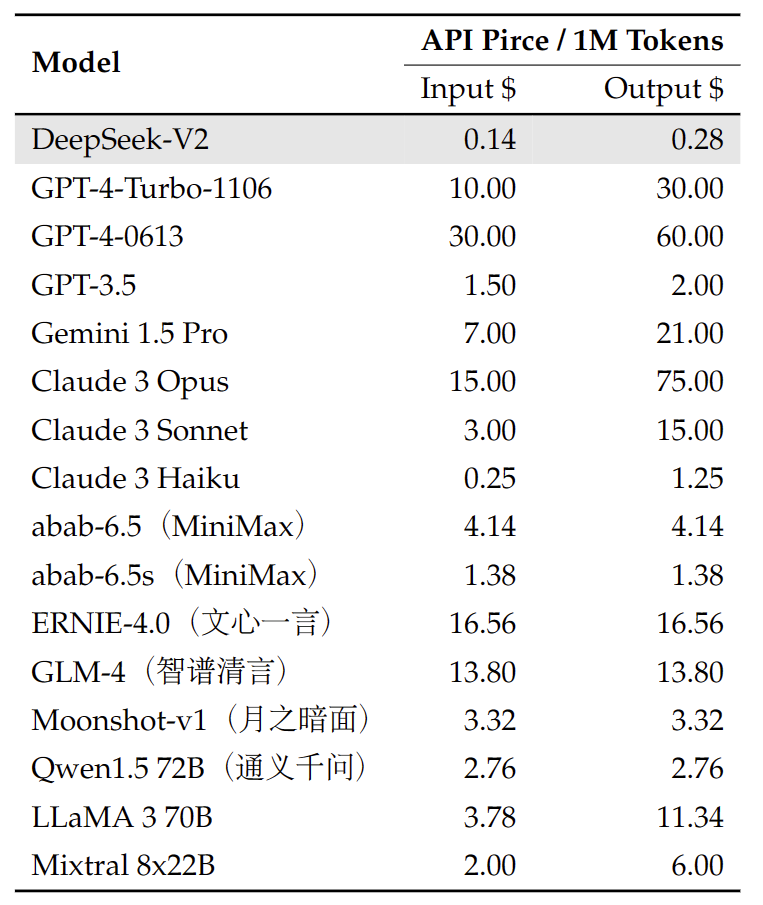

"DeepSeek V2.5 is the actual best performing open-source model I've tested, inclusive of the 405B variants," he wrote, further underscoring the model's potential. It could also be useful for a Defense Department tasked with capturing the best AI capabilities while reining in spending. DeepSeek's performance, insofar as it shows what is possible, will give the Defense Department more leverage in its discussions with industry and allow the department to find more competitors. DeepSeek's claim that its R1 artificial intelligence (AI) model was built at a fraction of the cost of its rivals' models has raised questions about the future of the entire industry, and it caused some of the world's biggest companies to lose market value. For general questions and discussions, please use GitHub Discussions. A general-purpose model that combines advanced analytics capabilities with a 13 billion parameter count, enabling it to perform in-depth data analysis and support complex decision-making processes. OpenAI and its partners just announced a $500 billion Project Stargate initiative that could drastically accelerate the construction of green energy utilities and AI data centers across the US. It's a research project. High throughput: DeepSeek V2 achieves a throughput 5.76 times higher than DeepSeek 67B, so it can generate text at over 50,000 tokens per second on standard hardware.
